I feel scared. Very scared.

Internet-wide surveillance and censorship, enabled by the unimaginably vast computational power of artificial intelligence (AI), is here.

This is not a futuristic dystopia. It’s happening now.

Government agencies are working with universities and nonprofits to use AI tools to surveil and censor content on the Internet.

This is not political or partisan. This is not about any particular opinion or idea.

What’s happening is that a tool powerful enough to surveil everything that’s said and done on the Internet (or large portions of it) is becoming available to the government to monitor all of us, all the time. And, based on that monitoring, the government – and any organization or company the government partners with – can then use the same tool to suppress, silence, and shut down whatever speech it doesn’t like.

But that’s not all. Using the same tool, the government and its public-private, “non-governmental” partners (think, for example: the World Health Organization, or Monsanto) can also shut down any activity that is linked to the Internet. Banking, buying, selling, teaching, learning, entertaining, connecting to each other – if the government-controlled AI does not like what you (or your kids!) say in a tweet or an email, it can shut down all of that for you.

Yes, we’ve seen this on a very local and politicized scale with, for example, the Canadian truckers.

But if we thought this type of activity could not, or would not, happen on a national (or even scarier – global) scale, we need to wake up right now and realize it’s happening, and it might not be stoppable.

New Documents Show Government-Funded AI Intended for Online Censorship

The US House Select Subcommittee on the Weaponization of the Federal Government was formed in January 2023 “to investigate matters related to the collection, analysis, dissemination, and use of information on US citizens by executive branch agencies, including whether such efforts are illegal, unconstitutional, or otherwise unethical.”

Unfortunately, the work of the committee is viewed, even by its own members, as largely political: Conservative lawmakers are investigating what they perceive to be the silencing of conservative voices by liberal-leaning government agencies.

Nevertheless, in its investigations, this committee has uncovered some astonishing documents related to government attempts to censor the speech of American citizens.

These documents have crucial and terrifying all-of-society implications.

In the Subcommittee’s interim report, dated February 5, 2024, documents show that academic and nonprofit groups are pitching a government agency on a plan to use AI “misinformation services” to censor content on internet platforms.

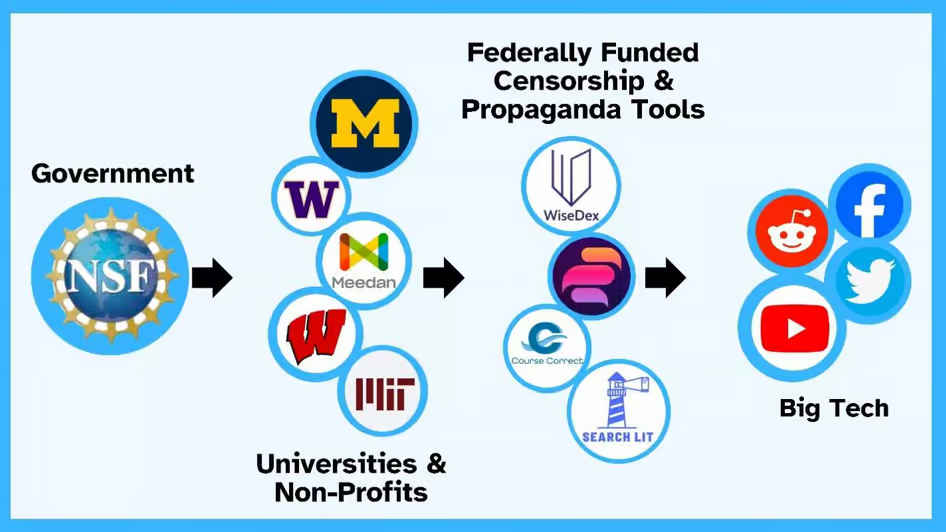

Specifically, the University of Michigan is explaining to the National Science Foundation (NSF) that the AI-powered tools funded by the NSF can be used to help social media platforms perform censorship activities without having to actually make the decisions on what should be censored.

Here’s how the relationship is visualized in the Subcommittee’s report:

Here’s a specific quote presented in the Subcommittee’s report. It comes from “Speaker’s notes from the University of Michigan’s first pitch to the National Science Foundation (NSF) about its NSF-funded, AI-powered WiseDex tool.” The notes are on file with the committee.

“Our misinformation service helps policy makers at platforms who want to…push responsibility for difficult judgments to someone outside the company…by externalizing the difficult responsibility of censorship.”

This is an extraordinary statement on so many levels:

1. It explicitly equates “misinformation service” with censorship.

This is a crucial equation, because governments worldwide are pretending to combat harmful misinformation when in fact they are passing massive censorship bills. The World Economic Forum (WEF) declared “misinformation and disinformation” the “most severe global risks” of the next two years, which presumably means that the biggest efforts of governments and their partners will go toward censorship.

When a government contractor explicitly states that it is selling a “misinformation service” that helps online platforms “externalize censorship,” it acknowledges that the two terms are interchangeable.

2. It refers to censorship as a “responsibility.”

In other words, it assumes that part of what the platforms should be doing is censorship. Not protecting children from sex predators or innocent citizens from misinformation – just plain and simple, unadulterated censorship.

3. It states that the role of AI is to “externalize” the responsibility for censorship.

The tech platforms do not want to make censorship decisions. The government wants to make those decisions but does not want to be seen as censoring. The AI tools allow the platforms to “externalize” the censorship decisions and the government to hide its censorship activities.

All of this should end the illusion that what governments around the world are calling “countering misinformation and hate speech” is not straight-up censorship.

What Happens When AI Censorship is Fully Implemented?

Knowing that the government is already paying for AI censorship tools, we have to wrap our minds around what this entails.

No manpower limits: As the Subcommittee report points out, government online censorship has, up to now, been limited by the large numbers of humans required to go through endless files and make censorship decisions. With AI, barely any humans need to be involved, and the amount of data that can be surveilled can be as vast as everything anyone says on a particular platform. That amount of data is incomprehensible to an individual human brain.

No one is responsible: One of the most frightening aspects of AI censorship is that when AI does it, there is no human being or organization (be it the government, the platforms, or the universities and nonprofits) that is actually responsible for the censorship. Initially, humans feed the AI tool instructions for what categories or types of language to censor, but then the machine goes ahead and makes the case-by-case decisions all by itself.

No recourse for grievances: Once AI is unleashed with a set of censorship instructions, it will sweep up gazillions of online data points and apply censorship actions. If you want to contest an AI censorship action, you will have to talk to the machine. Maybe the platforms will employ humans to respond to appeals. But why would they do that, when they have AI that can automate those responses?

No protection for young people: One of the claims made by government censors is that we need to protect our children from harmful online information, like content that makes them anorexic, encourages them to commit suicide, turns them into ISIS terrorists, and so on, as well as from sexual exploitation. These are all serious issues that deserve attention. But they are not nearly as dangerous to vast numbers of young people as AI censorship is.

The danger posed by AI censorship applies to all young people who spend a lot of time online, because it means their online activities and language can be monitored and used against them – maybe not now, but whenever the government decides to go after a particular type of language or behavior. This is a much greater danger to a much greater number of children than the danger posed by any specific content, because it encompasses all the activity they conduct online, touching on nearly every aspect of their lives.

Here’s an example to illustrate this danger: Let’s say your teenager plays lots of interactive video games online. Let’s say he happens to favor games designed by Chinese companies. Maybe he also watches others play those games, and participates in chats and discussion groups about those games, in which a lot of Chinese nationals also participate.

The government may decide next month, or next year, that anyone heavily engaged in Chinese-designed video games is a danger to democracy. This might result in shutting down your son’s social media accounts or denying him access to financial tools, like college loans. It might also involve flagging him on employment or dating websites as dangerous or undesirable. It might mean he is denied a passport or put on a watchlist.

Your teenager’s life just got a lot more difficult. Much more difficult than if he were exposed to an ISIS recruitment video or a suicide-glorifying TikTok post. And this would happen on a much larger scale than the sexual exploitation the censors are using as a Trojan Horse for normalizing the idea of online government censorship.

Monetizable censorship services: An AI tool owned by the government can theoretically be used by a non-governmental entity with the government’s permission, and with the blessing of the platforms that want to “externalize” the “responsibility” for censorship. So while the government might use AI to monitor and suppress, say, anti-war sentiment, a company could use it to monitor and suppress, say, anti-fast-food sentiment. The government could make a lot of money selling the services of the AI tools to third parties. The platforms could also conceivably ask for a cut. Thus, AI censorship tools can potentially benefit the government, tech platforms, and private corporations. The incentives are so powerful that it’s almost impossible to imagine they will not be exploited.

Can We Reverse Course?

I do not know how many government agencies and how many platforms are using AI censorship tools. I do not know how quickly they can scale up.

I do not know what tools we have at our disposal – other than raising awareness and trying to lobby politicians and file lawsuits to prevent government censorship and regulate the use of AI tools on the internet.

If anyone has any other ideas, now would be the time to implement them.

Return to the use of letters if you want confidentiality. As for myself, I play stupid: I am old (mentally deficient) and don’t know how to use the internet.

I still get all the info I need on paper, so I have a physical trace of everything that is done.

And that includes tax returns and bank operations.

Absolutely. There is a huge and pressing need to keep it real, keep our feet on the ground, and remember that “No” is a complete sentence. There is a need to make do and mend, to fix what is broken, and to recycle old-school appliances, vehicles, and machinery rather than continuously “upgrade.” Upgrade to what? And to where? Nobody actually knows. Admen and women will always attempt to grift the dollar out of everything. The article correctly states: “Initially, humans feed the AI tool instructions”… but how much humanity do these so-called “humans” really possess? There is a huge need for real humans to step up, do what is right in all aspects of life, and teach the watching “AIs” what proper behaviour is.